Linear Regression - Mathematical and Statistical Overview

Linear Regression - Mathematical and Statistical Overview

Table of Content

Remarks

I’ve recently sign up for machine learning this semester, I don’t really know what to expect but I’ll try my best anyway. While I had initial reservations about diving into this course so early, I’ve decided to embrace the challenge, and there were really no other choice honestly. I believe that true mastery in machine learning comes from a deep understanding of the underlying concepts, not just from using pre-built libraries.

In this post, I’ll try my best to provide a comprehensive overview of Linear Regression, covering its mathematical foundations, common solution approaches, and real-world applications. I’ll delve into the theory and concepts, while practical examples of Linear Regression will be covered in another post which will also be my Linear Regression Assignment for the course.

I, What is Regression?

Regression is a statistical technique used for modeling and analyzing relationships between a dependent variable (target) and one or more independent variables (predictors or features). In essence, regression is used to predict the value of the dependent variable based on the values of the independent variables. It plays a key role in both prediction and forecasting, as well as understanding the relationships within data. In its simplest form and for the context of machine learning, if you remember one of those problems back in highschool math where you have to find an equation to fit certain points in a graph, then thats a start! Some more specific examples involve using tax rate, inflation, etc… to predict house prices, or market trend. The general participants in a regression problem is as follow:

\[\begin{gather*} y : \text{ The target variable - which in this case would always be continuous} \quad \\ \mathbf{x} = (x_1, x_2, \ldots, x_d)^T \in \mathbb{R}^d : \text{ The predictive variables - could be continuous or discrete} \quad \end{gather*}\]

This is a fundamental technique in statistical modeling, to understand modern and machine learning approaches that leveraged on this concept, we must first grasp Classic Regression. Which often refers to a few main types of regression:

- Linear Regression: A regression technique that models the relationship between independent variables and a dependent variable as a linear function.

- Assumes linearity in data, meaning the relationship between predictors and the target variable is modeled as a straight line.

- Uses Ordinary Least Squares (OLS) to estimate the coefficients by minimizing the sum of squared residuals.

- General form:

\[\begin{equation} y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \ldots + \beta_d x_d + \epsilon \quad \end{equation}\]

- Non-linear Regression: A regression technique used to model relationships between variables that do not follow a straight line, but rather involve more complex relationships.

- The model can involve exponential, logarithmic, polynomial, or other forms of non-linear relationships.

- It uses iterative algorithms to fit the parameters since the relationship is not a simple linear function (eg. Newton Raphson).

- General form:

\[\begin{equation} y = \beta_0 + \beta_1 x_1 + \beta_2 x^2_2 + \ldots + \beta_d x^d_d + \epsilon \quad \end{equation}\]

- Single Regression: Regression with a single dependent variable and one independent variable.

\[x \in \mathbb{R} \text{ and } y \in \mathbb{R} \quad\]

- Multiple Regression: Regression analysis where there is one dependent variable and multiple independent variables.

\[x \in \mathbb{R}^d \text{ and } y \in \mathbb{R} \quad\]

- Multivariate Regression: Regression analysis with multiple dependent variables and multiple independent variables.

\[x \in \mathbb{R}^d \text{ and } y \in \mathbb{R}^c \quad | \quad c > 1 \quad\]

II, Linear Regression - General Concept

Prediction Function

In linear regression, the prediction function is typically written as:

\[\begin{equation} h_\theta(x) = \theta_0 + \theta_1 x_1 + \ldots + \theta_d x_d \quad \end{equation}\]

Here, \(\theta_0\) represents the intercept term.

Intercept Term Inclusion

In practice, it’s common to “add a column of ones to the data”. This means we set \(x_0 \equiv 1\) for all data points, allowing us to rewrite the prediction function \(h_\theta(x)\) as:

\[\begin{equation} h_\theta(x) = \sum_{i=0}^{d} \theta_i x_i = \boldsymbol{\theta}^T \mathbf{x} \quad \end{equation}\]

\[\begin{gather*} \text{Where } \boldsymbol{\theta} \text{ and } \mathbf{x} \text{ are vectors: } \quad \\ \boldsymbol{\theta} = [\theta_0, \theta_1, ..., \theta_d]^T \text{ and } \mathbf{x} = [1, x_1, ..., x_d]^T \quad \end{gather*}\]

This practice means a few things for us:

- Mathematical convenience: By adding a column of 1s, we can represent the intercept term as a coefficient in the linear regression equation. This simplifies the calculations and makes the model more consistent.

- Flexibility: Including an intercept term allows the model to account for a baseline level of the dependent variable, even when the independent variables are zero. This can be useful in many real-world scenarios.

Ordinary Least Squares (OLS)

The Ordinary Least Squares (OLS) method is used to determine the best-fitting line by minimizing the difference between the predicted values and the actual values in the training data. The training data with \(N\) data points is given in the format below:

\[\begin{equation} (x_1,y_1),(x_2,y_2), \ldots ,(x_N,y_N) \quad \end{equation}\]

To estimate the coefficients \(\boldsymbol{\theta} \in \mathbb{R}^{d+1}\), we need to minimize the error of the model given the training data. The error is captured using a cost function \(J(\theta)\).

\[\begin{equation} J(\theta) = \frac{1}{2N} \sum_{n=1}^{N} (h_\theta(x_n) - y_n)^2 \quad \end{equation}\]

Note that we use the constant term \(\frac{1}{2N}\) here to simplifies the calculation which will involve derivatives. In essence, you can use \(\frac{1}{2}, \frac{1}{N} \text{ and } \frac{1}{2N}\), which should all be valid in context of MSE.

The objective of this practice is to minimize the cost function (aka Mean Squared Error - MSE) This means finding the parameter vector \(\boldsymbol{\theta}\) that results in the smallest possible value of \(J(θ)\). In other words, we are trying to find the best coefficients that lead to the least amount of error between the predicted and actual values.:

\[\begin{equation} J(\theta) \rightarrow \min_{\theta} \quad \end{equation}\]

Extra: Approach with Maximum Likelihood Estimation (MLE)

To have a clear path towards finding the best performing coefficients, we can use the Maximum Likelihood Estimation (MLE) approach. In practice, to predict the target \(y\) for each data point \(x\), assume we have prediction function \(h_\theta(x)\) with error term of \(\mathbf{e}\) (Meaning that we assume this prediction function gives output that is \(\mathbf{e}\) off from the actual target \(y\)):

\[\begin{equation} y = h_\theta(x) + \mathbf{e} \quad \end{equation}\]

So assume \(\mathbf{e}\) follows a one-dimensional Normal Distribution:

\[\begin{equation} \mathbf{e} \sim \mathcal{N}(0, \sigma^2) \quad \end{equation}\]

To find the MLE, we define the Log-Likelihood function given the data as:

\[\begin{aligned} \ell(\theta) & =\sum_{n=1}^N \log \left[\frac{1}{\sqrt{2 \pi \sigma^2}} \exp \left(-\frac{\left(y_n-h_\theta\left(x_n\right)\right)^2}{2 \sigma^2}\right)\right] \\ & =N \log \left(\frac{1}{\sqrt{2 \pi \sigma^2}}\right)-\frac{1}{2 \sigma^2} \sum_{n=1}^N\left(y_n-h_\theta\left(x_n\right)\right)^2=: T_1-T_2 \quad \end{aligned}\]

Given that \(T_1\) is a constant term, maximizing \(\ell(\theta)\) equates to minimizing:

\[T_2=\frac{1}{2 \sigma^2} \sum_{n=1}^N\left(y_n-h_\theta\left(x_n\right)\right)^2=J(\theta) \quad\]

Given that \(J(\theta)\) is the cost function in OLS:

- Assuming our error term \(\mathbf{e}\) actually follows a Normal Distribution then the MLE approach will help us to achieve OLS.

- We see that in no way is \(\theta\) is dependant on the variance \(\sigma^2\). Therefore even in cases of ambiguous variance, we can still reasonably estimate \(\theta\).

III, Linear Regression - Common Approaches

Single Regression - Sum of Squares (Normal Equation)

Given dataset with \(N\) data points, with signature \(x_n,y_n \in \mathbb{R}\). This is a classic example of Single Regression, a single independent variable \(x\) and a single dependent variable \(y\). With that knowledge, we calculate the Expected Value and variance as:

\[\mathbb{E}(y \mid X=x)=h_\theta(x)=\theta_0+\theta_1 x ; \quad \operatorname{var}(y \mid X=x)=\sigma^2 \quad\]

In which, \(\theta_0\) is called the Intercept Term, \(\theta_1\) is called the Slope. We continue by calculating the Sample Variance and Sample Covariance:

\[\begin{aligned} \bar{x} & =\frac{1}{N} \sum_{n=1}^N x_n \quad \bar{y}=\frac{1}{N} \sum_{n=1}^N y_n \\ S X X & =\sum_{n=1}^N\left(x_n-\bar{x}\right)^2 \quad \text { - Sample Variance } \\ S X Y & =\sum_{n=1}^N\left(x_n-\bar{x}\right)\left(y_n-\bar{y}\right) \quad \text { - Sample Covariance }\quad \end{aligned}\]

So, when:

\[\mathbf{X}=\left(\begin{array}{cc} 1 & x_1 \\ 1 & x_2 \\ \vdots & \vdots \\ 1 & x_N \end{array}\right) \quad \mathbf{y}=\left(\begin{array}{c} y_1 \\ y_2 \\ \vdots \\ y_N \end{array}\right) \quad\]

Then:

\[\left(\mathbf{X}^T \mathbf{X}\right)=\left(\begin{array}{cc} N & \sum_{i=1}^N x_i \\ \sum_{n=1}^N x_n & \sum_{n=1}^N\left(x_n\right)^2 \end{array}\right) \quad \mathbf{X}^T \mathbf{y}=\binom{\sum_{i=n}^N y_n}{\sum_{i=n}^N y_n^2}\quad\]

By direct computation:

\[\left(\mathbf{X}^T \mathbf{X}\right)^{-1}=\frac{1}{S X X}\left(\begin{array}{cc} \frac{1}{N} \sum_{n=1}^N\left(x_n\right)^2 & -\bar{x} \\ -\bar{X} & 1 \end{array}\right) \quad\]

Therefore:

\[\hat{\theta}=\binom{\hat{\theta}_0}{\hat{\theta}_1}=\left(\mathbf{X}^T \mathbf{X}\right)^{-1} \mathbf{X}^T \mathbf{y}=\binom{\bar{y}-\bar{x} \hat{\theta}_1}{S X Y / S X X} \quad\]

Note: Sum of Squares is the simplest case of Normal Equation.

Multiple Regression

When applied to Single Regression, OLS usually solves the problem using direct calculation with the Sum of Squares as demonstrated above. However when moving to Multiple Regression, we must be able to generalize the concept of OLS to effectively solve such problems, below I will go in depth to some of the most common methods to achieve OLS in context of Multiple Regression:

Normal Equation

The Normal Equation is a powerful method for finding the optimal parameters (weights) in a linear regression model. It provides a systematic way to minimize the difference between the predicted values and the actual observed values in the dataset.

Say we are given dataset with dimensionality of \(X \times y \subset \mathbb{R}^d \times \mathbb{R}^n\) where:

\[\mathbf{X}=\left(\begin{array}{c}\left(x_1\right)^T \\ \left(x_2\right)^T \\ \vdots \\ \left(x_N\right)^T\end{array}\right)=\left(\begin{array}{ccccc}1 & x_{11} & x_{21} & \cdots & x_{d2} \\ 1 & x_{12} & x_{22} & \cdots & x_{d2} \\ \vdots & \vdots & \ddots & \vdots & \\ 1 & x_{1N} & x_{2N} & \cdots & x_{dN}\end{array}\right) \\ \text{ and } \mathbf{y}=\left(\begin{array}{c}y_1 \\ y_2 \\ \vdots \\ y_N\end{array}\right) \quad\]

Note that we have already implemented the inclusion of the Intercept Term, hence the column of ones. Therefore Matrix \(X\) here can be considered an Design Matrix \(X \in \mathbb{R}^{N \times (d+1)}\).

Given the context, our cost function \(J(\theta)\) is re-written as:

\[J(\theta)=\frac{1}{2N}(\mathbf{X} \theta-\mathbf{y})^T(\mathbf{X} \theta-\mathbf{y}) \quad\]

Let the gradient of the cost function \(J(\theta) \text{ w.r.t } \theta\):

\[\nabla J(\theta)=\frac{1}{N}\left( \mathbf{X}^T \mathbf{X} \theta-\mathbf{X}^T \mathbf{y} \right) \quad\]

The gradient tells us the direction in which the cost function is increasing. When the gradient equals zero, we are at a minimum (or maximum), and thus, we can derive the optimal parameters \(\hat{\theta}\). To minimize the cost function, we solve for \(\nabla J(\theta) = 0\) and derive equation:

\[\hat{\theta}=\left(\mathbf{X}^T \mathbf{X}\right)^{-1} \mathbf{X}^T \mathbf{y} \quad\]

The equation above is called the Normal Equation. We notice how in the process of solving the Normal Equation, we encounter the inverse operation \((\mathbf{X}^T \mathbf{X})^{-1}\).

- If this inverse exists, only then can we solve for \(\hat{\theta}\) by direct computation (The best case scenario).

- If this inverse does not exist, meaning that \((\mathbf{X}^T \mathbf{X})\) is not Full Rank, this leads to several implications:

- There exist a linear dependency between the columns of \(X\) (ie. multicollinearity). This means that some features are linearly dependent on others, which can cause issues in estimating the coefficients reliably.

- We could find a way to reduce the dimensionality of \(X\) by removing dependent features so that the Design Matrix \(X\) is Full Rank, enabling the computation of the inverse.

Without delving into the specifics of dimensionality reduction and feature selection, we approach the problem by calculating Expectation. Let \(\mathbb{E}(\hat{\theta} \mid \mathbf{X})\) be the Expectation given \(\hat{\theta}\) depending on \(X\)

\[\begin{aligned} \mathbb{E}(\hat{\theta} \mid \mathbf{X}) & =\mathbb{E}\left(\left(\mathbf{X}^T \mathbf{X}\right)^{-1} \mathbf{X}^T \mathbf{y} \mid \mathbf{X}\right) \\ & =\left(\left(\mathbf{X}^T \mathbf{X}\right)^{-1} \mathbf{X}^T\right) \mathbb{E}(\mathbf{y} \mid \mathbf{X}) \quad \\ & =\left(\mathbf{X}\left(\mathbf{X}^T \mathbf{X}\right)^{-1}\right) \mathbf{x} \theta \\ & =\theta\end{aligned}\]

The equation shows how we can compute the Expected Value of \(\hat{\theta}\) conditioned on \(X\). By ensuring that \(\mathbb{E}(\hat{\theta} \mid \mathbf{X}) = \theta\), we’re verifying that our estimation \(\hat{\theta}\) is unbiased. This means that, on average, it accurately reflects the true parameter values.

By using formula: \(\operatorname{var}(\mathbf{a}+\mathbf{A} \mathbf{y})=\mathbf{A} \operatorname{var}(\mathbf{y}) \mathbf{A}^T\), with \(\mathbf{a},\mathbf{y}\) as vectors and \(\mathbf{A}\) as a constant matrix, we have:

\[\begin{aligned} \operatorname{var}(\hat{\theta} \mid \mathbf{X}) & =\operatorname{var}\left(\left(\mathbf{X}^T \mathbf{X}\right)^{-1} \mathbf{X}^T \mathbf{y} \mid \mathbf{X}\right) \\ & =\left[\left(\mathbf{X}^T \mathbf{X}\right)^{-1} \mathbf{X}^T\right] \operatorname{var}(\mathbf{y} \mid \mathbf{X})\left[\mathbf{X}\left(\mathbf{X}^T \mathbf{X}\right)^{-1}\right] \quad \\ & =\left[\left(\mathbf{X}^T \mathbf{X}\right)^{-1} \mathbf{X}^T\right] \sigma^2 \mathbf{X}\left[\mathbf{X}\left(\mathbf{X}^T \mathbf{X}\right)^{-1}\right] \\ & =\sigma^2\left[\left(\mathbf{X}^T \mathbf{X}\right)^{-1} \mathbf{X}^T \mathbf{X}\left(\mathbf{X}^T \mathbf{X}\right)^{-1}\right] \\ & =\sigma^2\left(\mathbf{X}^T \mathbf{X}\right)^{-1}\end{aligned}\]

In which we see how the variance of \(\hat{\theta}\) only depends on \(X\), not \(y\). The variance formula derived in the context of the estimation demonstrates that the uncertainty in our estimates only depends on the Design Matrix and not on the actual observed values. This characteristic is crucial for understanding how the choice of features influences the reliability of our estimates.

In summary, the Normal Equation provides a comprehensive method to derive the best-fitting parameters in linear regression by using matrix operations. It emphasizes the relationships among the independent variables and showcases how these relationships affect the predictions and their associated uncertainties. Understanding the Normal Equation not only enhances our comprehension of OLS but also equips us to handle practical scenarios, such as multicollinearity, that may arise when dealing with multiple regression problems.

QR Decomposition

In practice, the nature of the present of inverse operations in Normal Equation often leads to Numerical Instability, especially in larger datasets. This effect can result in inaccurate estimates of the regression coefficients, compromising the reliability of the model. To mitigate these issues, QR Decomposition is often employed in linear regression analysis.

QR Decomposition is a concept in Linear Algebra. “QR Decomposition - Wikipedia”. Some well-known QR Decomposition algorithm includes: Householder, Gram-Schmidt, etc…

Going back to our Design Matrix \(X \in \mathbb{R}^{N \times (d+1)}\). Assume we can find matrix \(Q \in \mathbb{R}^{N \times (d+1)}\) and matrix \(R \in \mathbb{R}^{(d+1) \times (d+1)}\) such that: \(X = QR\), where:

- \(Q\) is an orthonormal column matrix, meaning \(Q^TQ = I\).

- \(R\) is an upper triangular matrix, meaning that all elements above the main diagonal equals 0.

With matrices \(Q\) and \(R\) (Given \(R\) is Full Rank) satisfied, we can denote:

\[\begin{aligned} \mathbf{X} & =\mathbf{Q R} \\ \mathbf{X}^T \mathbf{X} & =(\mathbf{Q R})^T(\mathbf{Q R})=\mathbf{R}^T \mathbf{Q}^T \mathbf{Q} \mathbf{R}=\mathbf{R}^T \mathbf{R} \quad \\ \left(\mathbf{X}^T \mathbf{X}\right)^{-1} & =\left(\mathbf{R}^T \mathbf{R}\right)^{-1}=\mathbf{R}^{-1}\left(\mathbf{R}^T\right)^{-1} \\ \hat{\theta} & =\left(\mathbf{X}^T \mathbf{X}\right)^{-1} \mathbf{X}^T \mathbf{y} \\ & =\mathbf{R}^{-1}\left(\mathbf{R}^T\right)^{-1}(\mathbf{Q R})^T \mathbf{y} \\ & =\mathbf{R}^{-1}\left(\mathbf{R}^T\right)^{-1} \mathbf{R}^T \mathbf{Q}^T \mathbf{y} \\ & =\mathbf{R}^{-1} \mathbf{Q}^T \mathbf{y} .\end{aligned}\]

From the last equation, we can derive:

\[\mathbf{R} \hat{\theta}=\mathbf{R} \mathbf{R}^{-1} \mathbf{Q}^{T} \mathbf{y}=\mathbf{Q}^{T} \mathbf{y}. \quad\]

Given \(R\) is an upper triangular matrix, we can easily solve for \(\hat{\theta}\) with Gaussian Elimination, for example:

\[R \hat{\theta} = \begin{pmatrix} 4 & 2 & 1 \\ 0 & 3 & 1 \\ 0 & 0 & 4 \end{pmatrix} \begin{pmatrix} \hat{\theta}_1 \\ \hat{\theta}_2 \\ \hat{\theta}_3 \end{pmatrix} = Q^T y = \begin{pmatrix} 4 \\ 11 \\ 8 \end{pmatrix} \quad\]

Extra: Householder

The Householder algorithm transforms a given matrix into an upper triangular form by applying a sequence of reflections. Each reflection is designed to zero out specific elements below the diagonal, thereby simplifying the matrix. Process:

- For each column of the matrix \(A\), a Householder vector is constructed. This vector reflects the column onto a multiple of the standard basis vector (essentially, it “flattens” the column).

- The transformation is defined using a Householder matrix \(Q_j\), which is an orthogonal matrix that represents the reflection.

- The original matrix \(R\) updated by applying the Householder transformation, while the orthogonal matrix \(Q\) is accumulated by multiplying it with each Householder matrix’s transpose.

import numpy as np

def householder_qr(A):

m, n = A.shape

Q = np.eye(m)

R = A.copy()

for j in range(n):

x = R[j:, j]

e = np.zeros_like(x)

e[0] = 1

u = x + np.sign(x[0]) * np.linalg.norm(x) * e

v = u / np.linalg.norm(u)

Q_j = np.eye(m)

Q_j[j:, j:] -= 2.0 * np.outer(v, v)

R = Q_j @ R

Q = Q @ Q_j.T

return Q, R

Think of the Householder transformation as using mirrors to reflect vectors. Each reflection aligns a column of the matrix with the direction of the coordinate axes, progressively creating zeros below the diagonal.

Extra: Gram-Schmidt

The Gram-Schmidt algorithm constructs an orthogonal basis from the columns of the matrix by iteratively orthogonalizing each column with respect to the previously computed columns. Process:

- For each column of matrix \(A\), the algorithm subtracts projections of that column onto all previously computed orthogonal vectors.

- The resulting vector is then normalized to create a unit vector, which forms part of the orthogonal matrix \(Q\).

- The coefficients of these projections are stored in matrix \(R\), which contains the inner products of the original columns with the orthogonalized columns.

import numpy as np

def gram_schmidt_qr(A):

m, n = A.shape

Q = np.zeros((m, n))

R = np.zeros((n, n))

for j in range(n):

v = A[:, j]

for i in range(j):

R[i, j] = np.dot(Q[:, i], A[:, j])

v = v - R[i, j] * Q[:, i]

R[j, j] = np.linalg.norm(v)

Q[:, j] = v / R[j, j]

return Q, R

Imagine you are trying to create a set of orthogonal vectors from a set of linearly independent vectors. The Gram-Schmidt process helps by systematically removing components that align with existing vectors, thus ensuring that each new vector is orthogonal to the ones before it.

For more a more comprehensive view on QR Decomposition, you can refer to “Walter Gander - Algorithms for the QR-Decomposition”

Conclusion

In conclusion, Linear Regression is a powerful yet lightweight tool in the realm of machine learning and statistics. Its simplicity allows for quick implementations and interpretations, making it an excellent choice for understanding relationships between variables and providing a solid baseline for predictive modeling. Despite its effectiveness, it is essential to recognize the limitations inherent in Linear Regression, such as its assumptions of linearity and sensitivity to outliers, which can impact the model’s performance.

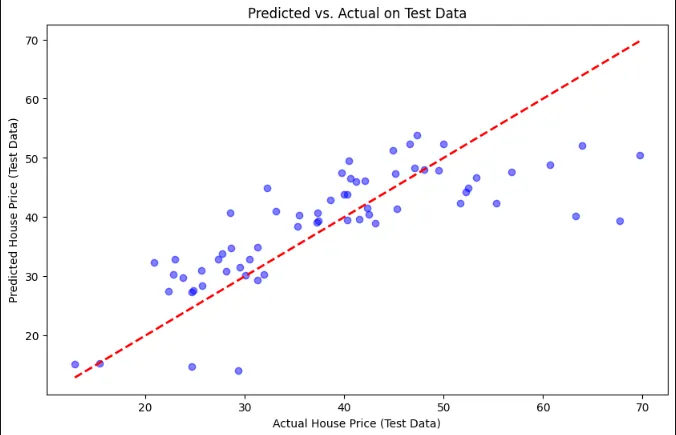

I will be covering some examples of Linear Regression as part of my Assignment post for my course, which you can find here MAT3533 - Linear Regression Assignment.

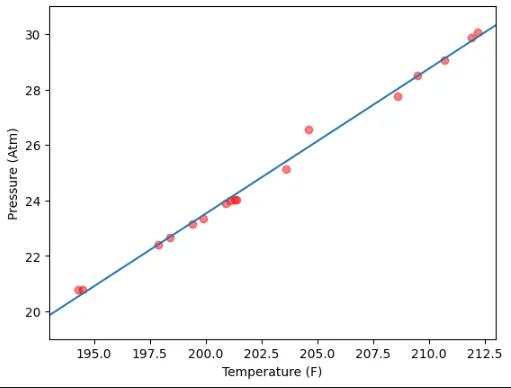

Furthermore I will also implement Linear Regression from scratch in Python, which you can learn more about here Linear Regression - Python implementation from scratch.

Thank you for reading to the end, if there are any mistakes or suggestions to the material, feel free to contact me at Contact.

Extras:

- L1, L2 Regularization for Linear Regression (Lasso, Ridge).

- Visualization techniques for Linear Regression.

- Data Preprocessing for Linear Regression.

References:

- “MAT3533 - Linear Regression Lecture - Prof. Cao Van Chung”

- “StatQuest with Josh Starmer”.

- “Walter Gander - Algorithms for the QR-Decomposition”

- Oleksandr Dorokhov, Lyudmyla Malyarets, Kadri Ukrainski and Dmytro Yevstrat, Estimation of Parameters in Regression Analysis Based on QR Decomposition of Rectangular Matrices by Householder Reflections.

- Xin Yan & Xiao Gang Su, Linear Regression Analysis - Theory and Computing.