My first bot - Tic Tac Toe - Part II

My first bot - Tic Tac Toe - Part II

My first bot! - Tic Tac Toe in Java with Swing - Part II

Prologue

If you are interested in the source code or trying out the program, you can check it out on my GitHub: yuk068 - TicTacToeGame

In this post, I will continue by going through how i made my very first bot for Tic Tac Toe. Which uses the minimax algorithm with alpha beta pruning that can basically always win / draw with the appropriate depth.

III, Tic Tac Toe bot - The brain

So TLDR, although I created the GUI and functionality for my Chess Game first, I soon realized that creating an entire chess bot was just something else… And at that point, I had 0 knowledge of making such bots. So I did some digging, and for this particular type of bot and game, one where It’s:

- Turn based, 2 players

- No or very little luck involved

- The game’s state can always be evaluated logically

- Each players or the entire game has a “score” that can logically tell if a players is winning / losing, usually a negative value is winning for one player and positive values are winning for the other while 0 is balanced / draw.

I discoverd an algorithm called “Minimax”. From my perspective, it essentially looks into the future - calculating every single outcome by evaluating the game’s state, all to find the absolute best move which leads to a desirable evaluation for the player that’s trying to maximize / minimize the score in their favour (hence the name - minimax). However, for a good bot, other than minimax, you’ll also need a good and robust evaluation function. Or in other words, the evaluation function is “the brain” while the minimax implementation is how the bot uses “the brain” to perceives different positions to make a decision.

1, Evaluation function

So to first make a bot, we almost always have to implement some way to evaluate a certain position. You might have seen them before with chess evaluation, where they have a certain value meter that is high when white is winning, low when black is winning and fluctuate between 0 when the position is equal. And while, almost every game like that requires very intricate and precise evaluation functions. Because I was inexperience and is very unfamiliar with these topics, my approach was very primitive and inefficient:

public int evaluateBoard() {

if (checkWin() == PLAYER_X) return PLAYER_X_WON;

else if (checkWin() == PLAYER_O) return PLAYER_O_WON;

else return DRAW; // Or GAME_ONGOING

}

Essentially, this evaluation function is very literal and shortsighted, It only checks if the game is over and the state of the game when its over. However, for something as simple as Tic Tac Toe, I believe that this approach is acceptable. Okay, so this function allows the bot to know what is the current state of the game, win?, loss,? draw? or still ongoing. But for now, this is all what we need.

Note that typically, the evaluation function can be considered the most important aspect of any bot that uses minimax. It’s essentially what makes a bot who it is, because you can basically code your perspective into the evaluation itself. For example, in chess, if I think making a bunch of Knight moves is good, then I’ll code the evaluation function in a way that take into account if in every position, is my Knight in a different position, and work its way up from there. Okay, that example is a bit over board and impractical, how about this? If I think capturing a piece with a Pawn is good, I pass the board to the evaluation function, if the Pawn has a legal move that can capture an enemy piece, then the evaluation function will react accordingly.

So all in all, I just want to say that evaluation function for more complex games is way harder to implement than this example in Tic Tac Toe. I’ve made the mistake of thinking that only with minimax, I can make a bot for every other similar games. Don’t make that mistake, making a good and accurate evaluation function all by yourself is no easy task, and now I have so much respect for people who can actually get them right. But just as I said, for now, this is all we need.

2, Minimax

The next step, after finishing the bot’s “brain”, we have to give it sight for it to make the sensible move. I don’t really want to go through the actually implementation of the algorithm itself because other people have done it far better than me, this video from Sebastian Lague helped out a lot: Sebastian Lague - Minimax and Alpha Beta pruning. Basically, minimax goes through something called a “Game tree” and needs 2 concrete arguments and a function:

- The game itself, and necessary information about the game

- The depth value

- The evaluation function, either from the game itself, or one somewhere else that can evaluation the position

To give you a better overview, this is the minimax algorithm implemented in my TicTacToeBot class:

public static int minimax(TicTacToeGame currentGame, int alpha, int beta, int depth, boolean turn) {

if (depth == 0 || currentGame.gameIsOver()) {

return currentGame.evaluateBoard();

}

if (turn) {

int maxEval = Integer.MIN_VALUE;

int eval;

for (int index : Utility.getAllEmptySquare(currentGame.getBoard())) {

int[] move = Utility.indexToCoordinates(index);

TicTacToeGame afterMove = Utility.newGameFromArgs(currentGame.turn, currentGame.makeMoveNewBoard(move[0], move[1]));

afterMove.turn = !afterMove.turn;

eval = minimax(afterMove, alpha, beta, depth - 1, afterMove.turn);

maxEval = Math.max(maxEval, eval);

alpha = Math.max(alpha, maxEval);

if (beta <= alpha) break;

}

return maxEval;

} else {

int minEval = Integer.MAX_VALUE;

int eval;

for (int index : Utility.getAllEmptySquare(currentGame.getBoard())) {

int[] move = Utility.indexToCoordinates(index);

TicTacToeGame afterMove = Utility.newGameFromArgs(currentGame.turn, currentGame.makeMoveNewBoard(move[0], move[1]));

afterMove.turn = !afterMove.turn;

eval = minimax(afterMove, alpha, beta, depth - 1, afterMove.turn);

minEval = Math.min(minEval, eval);

beta = Math.min(beta, minEval);

if (beta <= alpha) break;

}

return minEval;

}

}

As you can see, there are several interesting points (fir now, we will skip Alpha Beta pruning for simplicity sake, and I will touch on them more in the next segment of this post):

- So first, the game itself, pretty self-explanatory, this will depend on your concrete implementation of your game, but for my TicTacToeGame, it includes info like whose turn it is, the core int[][] board itself, and the width and length of the board…

- Next is the depth value, which means how far ahead is this algorithm searching this is an example for depth = 2:

- Say if it’s X’s turn, then this algorithm will first search and evaluate all possible moves for X, and for each of X’s moves, it will hypothetically make O’s moves too, and then it will also take into account all possible respond from O and then repeat evaluating for X again.

- This process will go as deep as the depth value, as you can see how the function calls itself recursively, until either depth is 0 (it has calculated all possible outcomes regarding it’s depth) or if the game is over.

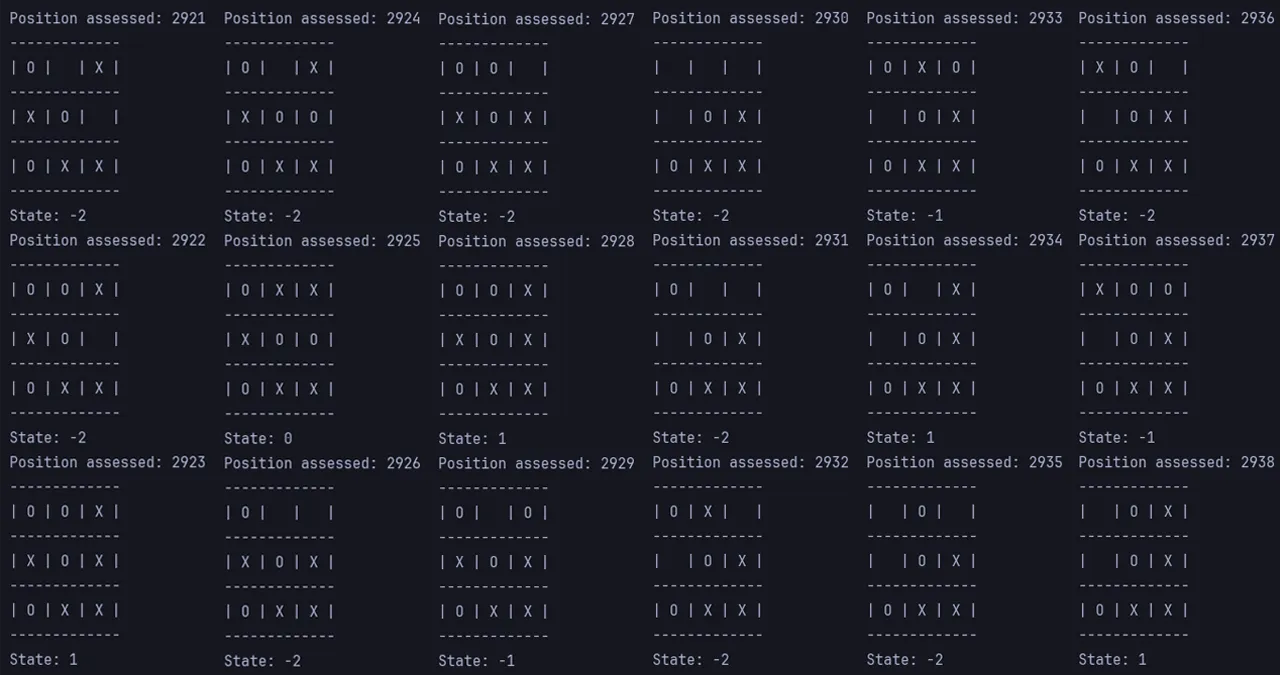

- As explained in III, 1, evaluationBoard() here is very primitive and barebone, which will result in a penalty of forcing the bot to be set to a higher depth if it wants to play moves accurately (from my testing, I can’t beat the bot anymore around depth 7-8 onwards).

So those are all the core ingredients that minimax takes into account, then it iterate through every single move, or in Tic Tac Toe’s case, every empty square and make the hypothetical appropriate move, you can see how with a high enough depth, it will traverse the “Game tree” to retrieve the outcome of every conceivable branches, it then take those results, compare each other and return the absolute best score for the player thats trying to maximize or minimize the evaluation. Further more, because how this logic is looking the best move for 1 player, thanks to recursive, it also assumes that the opponent will also play the best move, which will result it in returning the most sensible and logically accurate move possible with the right depth.

Although this implementation works for my project, note that it’s just because Tic Tac Toe is a very simple game with not a lot of varied positions. Just by increasing the board size, with high enough depth and a bad evaluation function, it can take literal hours just for minimax to calculate the right move. So be on the look out for that if you plan to also take up a similar project.

3, Alpha Beta pruning

Because calculation can so quickly multiplies with the right (or wrong) depth value, there is also something called Alpha Beta pruning, which significantly trims down the “Game tree” that the minimax algorithm have to search. I have to admit, I don’t fully understand it yet, and it’s not really important for this project because of reasons I have mentioned about how Tic Tac Toe is very simple. But just note that it is a very crucial part of minimax when it comes to optimization for more complex games, again, you can review my previous minimax implementation for the game and the video by Sebastian Lague also covers Alpha Beta pruning: Sebastian Lague - Minimax and Alpha Beta pruning.

IV, Tic Tac Toe bot - Incorporated into Gameplay

While the bot has a “brain” with “sight” already, It still needs a “hand” to actually make the move. And because of that, we need to actually incorporate the bot into the gameplay itself. First, while the minimax algorithm is in place, you might notice that it only returns the evaluation, rather than actually affecting anything about the game, when it make hypothetical moves for calculation sake, that was done on a new game, every time, complete detached from the game that’s actually being displayed by the GUI. Because of that, we first create the bot by initializing all it’s necessary attributes, but most importantly, attach a concrete TicTacToeGame to it:

public TicTacToeBot(TicTacToeGame game, int depth) {

this.depth = depth;

this.game = game;

}

This is also consistent with how TicTacToeGame is being handled in TicTacToeGameGUI, just as how starting a new game is seamless, creating a new bot for the game is also straight forward:

game = new TicTacToeGame();

bot = new TicTacToeBot(game, depth);

Just as how TicTacToeGame have method makeMove(int x, int y), and the player can use mouse to invoke this method, TicTacToeBot also has it’s own way to make moves on the board:

public void makeMove() {

if (!game.gameIsOver()) {

int bestScore = game.turn ? Integer.MIN_VALUE : Integer.MAX_VALUE;

int bestMoveIndex = -1;

boolean botTurn = game.turn;

for (int index : Utility.getAllEmptySquare(game.getBoard())) {

int[] move = Utility.indexToCoordinates(index);

TicTacToeGame afterMove = Utility.newGameFromArgs(botTurn, game.makeMoveNewBoard(move[0], move[1]));

afterMove.turn = !afterMove.turn;

int score = minimax(afterMove, Integer.MIN_VALUE, Integer.MAX_VALUE, depth, afterMove.turn);

if (botTurn && score > bestScore) {

bestScore = score;

bestMoveIndex = index;

} else if (!botTurn && score < bestScore) {

bestScore = score;

bestMoveIndex = index;

}

}

if (bestMoveIndex != -1) {

int[] bestMove = Utility.indexToCoordinates(bestMoveIndex);

game.makeMove(bestMove[0], bestMove[1]);

}

}

}

As you can see, It’s kind of similar to how the minimax algorithm itself works, also iterating through all the best moves to come to an decision, only that minimax is what allows the bot to actually know what that best move is. it then invoke makeMove(int x, int y) just as how a player would. All we do now is implement when the bot will move, in my case, right after the player has made a move. And all thanks to my previous attempts of making games, this time around the process was very smooth and there are very little code coupling, I can continue to add new features seamlessly just like how I implemented this bot.

V, Conclusion

So all in all… Making a bot is hella hard. This particular bot is the more basic and traditional approach. Nowadays there are stuff like machine learning, AI,… that are more inclined to these type of systems. Nevertheless, this is still my first ever functional bot, when it finally worked I was so damn happy, I jumped out of my chair and my heart was racing. I don’t think I will stop making more bots for more games like these, I just live for it right now. For this particular Tic Tac Toe game… I do plan to expand it to Caro Game or Gomoku, which is just a larger Tic Tac Toe board with some additional rules that make them less casual than Tic Tac Toe. At that point, making a bot for those kind of games is going to be much more challenging… I accept the challenge.